We’re moving from an era of Search Engine Optimisation (SEO) to one of Generative Engine Optimisation (GEO). That means traditional search might send you less traffic while AI assistants ingest and summarise your articles. Do you want to be part of that future?

Understanding AI crawlers

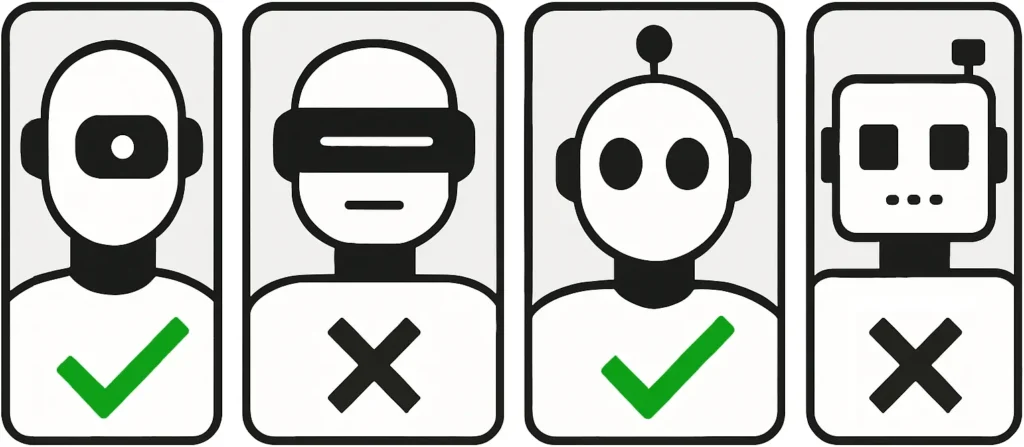

Not all bots are built the same. Traditional search engine crawlers index your pages for ranking in search results. Today’s AI landscape includes:

- LLM data scrapers – bots like GPTBot and CCBot vacuum up as much content as possible to train large language models. They do not index pages for search results; they ingest data for training.

- AI search & assistants – bots such as ChatGPT‑User or PerplexityBot fetch specific pages on demand, often citing your site as a source. They behave more like search engines and place less strain on servers.

- AI agents – a new breed of bots (for example ChatGPT‑Operator) that can interact with your site like a user: filling in forms, booking appointments and completing tasks autonomously.
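If you want to tell these three categories apart in your own tooling, a lookup table of user-agent tokens is a simple place to start. The Python sketch below is a minimal illustration that assumes a case-insensitive substring match on the User-Agent header is good enough. `AI_BOT_CATEGORIES` and `classify_user_agent` are hypothetical names. The tokens are only the ones named in this article, and the ChatGPT-Operator token in particular is taken from the example above rather than checked against vendor documentation. User-Agent headers are easy to spoof, so treat the result as a hint, not proof.

```python
# Hypothetical lookup table: user-agent tokens named in this article,
# mapped to the three categories. Extend it as new bots appear.
AI_BOT_CATEGORIES = {
    "GPTBot": "LLM data scraper",
    "CCBot": "LLM data scraper",
    "ChatGPT-User": "AI search & assistant",
    "PerplexityBot": "AI search & assistant",
    "ChatGPT-Operator": "AI agent",  # assumed token; verify with the vendor's docs
}


def classify_user_agent(user_agent: str) -> str:
    """Return the category of the first known token found in a User-Agent header."""
    ua = user_agent.lower()
    for token, category in AI_BOT_CATEGORIES.items():
        if token.lower() in ua:  # case-insensitive substring match
            return category
    return "unknown / not an AI bot"


if __name__ == "__main__":
    # Illustrative, shortened User-Agent strings.
    samples = [
        "Mozilla/5.0 (compatible; GPTBot/1.0)",
        "CCBot/2.0 (https://commoncrawl.org/faq/)",
        "Mozilla/5.0 (compatible; PerplexityBot/1.0)",
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
    ]
    for ua in samples:
        print(f"{classify_user_agent(ua):26} <- {ua}")
```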

Sample robots.txt rules

If you decide to keep certain AI bots out, the first line of defence is your robots.txt file. Here’s how to politely ask specific crawlers to stay away:

    # Block OpenAI bots
    User-agent: ChatGPT-User
    Disallow: /

    User-agent: GPTBot
    Disallow: /

    # Block Common Crawl
    User-agent: CCBot
    Disallow: /

Note: robots.txt is only a polite request. Some bots ignore it and crawl anyway, so don’t rely on this method alone.
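Before deploying rules like these, it can help to check how a parser actually reads them. Python's standard-library `urllib.robotparser` offers one quick way to do that. The sketch below assumes the sample rules for ChatGPT-User, GPTBot and CCBot; `check_access` is a hypothetical helper name. Keep in mind that this parser matches user-agent names with a simple substring test, so real crawlers may handle edge cases differently.

```python
from urllib.robotparser import RobotFileParser

# The sample rules, one directive per line.
ROBOTS_TXT = """\
# Block OpenAI bots
User-agent: ChatGPT-User
Disallow: /

User-agent: GPTBot
Disallow: /

# Block Common Crawl
User-agent: CCBot
Disallow: /
"""


def check_access(robots_txt: str, user_agents: list, path: str = "/") -> dict:
    """Return {user_agent: allowed?} for one path under the given rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse() also marks the rules as loaded
    return {agent: parser.can_fetch(agent, path) for agent in user_agents}


if __name__ == "__main__":
    agents = ["GPTBot", "ChatGPT-User", "CCBot", "PerplexityBot", "Googlebot"]
    for agent, allowed in check_access(ROBOTS_TXT, agents, "/blog").items():
        print(f"{agent:14} {'allowed' if allowed else 'blocked'}")
```

Run as-is, it reports GPTBot, ChatGPT-User and CCBot as blocked. PerplexityBot and Googlebot are allowed because no group names them and there is no `User-agent: *` fallback.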

Should you allow or block AI crawlers?

There’s no one‑size‑fits‑all answer. It depends on the type of bot, your content, and your goals.

Reasons to block LLM data scrapers

- Protect exclusive content – keep paid or unique articles from being reproduced in AI outputs without permission.

- Reduce server load – scraping bots can crawl thousands of pages, slowing down your site and increasing hosting costs.

- Control sensitive data – prevent proprietary information or copyrighted materials from being absorbed into training datasets.

Reasons to allow LLM data scrapers

- Brand awareness – appearing in AI‑generated answers can establish your site as a source of authority, even without a direct link.

- Manageable costs for small sites – if your content isn’t exclusive and your traffic is modest, the benefits of reach may outweigh the cost.

- Future search visibility – generative engines may become the primary way people seek information, so appearing in their knowledge bases could be valuable.

AI search & assistants: usually worth allowing

Bots that fetch information on demand, such as ChatGPT’s browsing mode or PerplexityBot, request pages only when a human asks a question. They often cite your site, giving you visibility with minimal server load. For most Joomla websites, allowing these makes sense.

AI agents: proceed with caution

Agents can perform complex tasks on your website. If you run an e‑commerce shop, a shopping agent might drive sales. But on a membership site, an automated bot could create unwanted accounts or generate fake orders. Assess the risk and configure your robots.txt or security tools accordingly.
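The choices in this section (block scrapers if you need to, allow on-demand assistants) can be written down as a per-bot policy and turned into robots.txt groups. Below is a minimal Python sketch, assuming a hypothetical `POLICY` table and `build_robots_txt` helper. The allow/block values shown are only an example, not a recommendation for every site. Agents are left out on purpose, because their user-agent tokens vary. Add them once you have confirmed the tokens in your logs or in the vendor's documentation.

```python
# Hypothetical example policy: True = allow, False = block.
POLICY = {
    "GPTBot": False,         # LLM data scraper
    "CCBot": False,          # LLM data scraper
    "ChatGPT-User": True,    # AI search & assistant
    "PerplexityBot": True,   # AI search & assistant
}


def build_robots_txt(policy: dict) -> str:
    """Render one robots.txt group per named bot."""
    groups = []
    for agent, allowed in policy.items():
        # An empty Disallow value means nothing is disallowed for that agent;
        # "Disallow: /" blocks the whole site.
        rule = "Disallow:" if allowed else "Disallow: /"
        groups.append(f"User-agent: {agent}\n{rule}")
    return "\n\n".join(groups) + "\n"


if __name__ == "__main__":
    print(build_robots_txt(POLICY))
```

The output only covers the bots you name. Merge it into your existing robots.txt rather than replacing the file, so any rules you already have for other crawlers stay in place.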

Beyond robots.txt: taking control of AI access

If you need finer control, consider these measures:

- Block by IP – ban known bot IP ranges when you discover unwanted crawlers ignoring your rules.

- Use the TDM Reservation Protocol – publish a tdmrep.json file in your /.well-known folder and add TDM meta tags to your pages to state your text and data mining policy. This specification, published by a W3C Community Group rather than as a formal W3C Recommendation, helps compliant bots understand your terms.

- Leverage infrastructure providers – services like Cloudflare now offer one‑click AI bot blocking, a pay‑per‑crawl system and managed enforcement of robots.txt. These tools can keep rogue bots out while letting approved crawlers in.

- Monitor and adapt – review your server logs to see which bots are visiting. Adjust your policies as new crawlers appear and as your content strategy evolves.
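To act on the "Block by IP" and "Monitor and adapt" points, a short script over your access log can show which AI bots visit and whether any of them come from ranges you have already banned. This Python sketch assumes the common Apache/Nginx "combined" log format. `AI_BOT_TOKENS`, `BLOCKED_NETWORKS` and `summarise` are hypothetical names, and the two networks are reserved documentation ranges (RFC 5737) standing in for real ones. Matching relies on the User-Agent string only, which bots can fake.

```python
import ipaddress
import re
from collections import Counter

# Tokens to look for; extend as new crawlers show up in your logs.
AI_BOT_TOKENS = ["GPTBot", "CCBot", "ChatGPT-User", "PerplexityBot"]

# Hypothetical blocklist: RFC 5737 documentation ranges used as placeholders.
BLOCKED_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("198.51.100.0/24"),
]

# "Combined" log format: client IP first, User-Agent in the last quoted field.
LOG_LINE = re.compile(r'^(\S+) \S+ \S+ \[[^\]]*\] "[^"]*" \d{3} \S+ "[^"]*" "([^"]*)"')


def is_blocked(ip: str) -> bool:
    """True if the address falls inside one of the blocked networks."""
    try:
        addr = ipaddress.ip_address(ip)
    except ValueError:  # e.g. a hostname instead of an IP
        return False
    return any(addr in net for net in BLOCKED_NETWORKS)


def summarise(log_lines):
    """Count hits per AI bot token and collect bot hits from blocked ranges."""
    hits = Counter()
    from_blocked = []
    for line in log_lines:
        match = LOG_LINE.match(line)
        if not match:
            continue  # skip lines in other formats
        ip, user_agent = match.groups()
        for token in AI_BOT_TOKENS:
            if token.lower() in user_agent.lower():
                hits[token] += 1
                if is_blocked(ip):
                    from_blocked.append((ip, token))
                break  # count each request once
    return hits, from_blocked


if __name__ == "__main__":
    # Illustrative log lines with shortened User-Agent strings.
    sample = [
        '203.0.113.7 - - [01/Jan/2025:10:00:00 +0000] "GET /blog HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
        '192.0.2.10 - - [01/Jan/2025:10:00:05 +0000] "GET /blog HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; PerplexityBot/1.0)"',
    ]
    hits, flagged = summarise(sample)
    print(dict(hits))
    print(flagged)
```

Running it regularly, for example over each day's log, makes it easier to spot new crawlers and decide whether your robots.txt or IP rules need updating.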
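For the TDM Reservation Protocol, the well-known file is a JSON array of rules, and the per-page signal is a pair of meta tags. The Python sketch below generates both, assuming you want to reserve text and data mining rights for the whole site. `tdmrep_json` and `tdm_meta_tags` are hypothetical helpers, and the policy URL is a placeholder. Check the TDMRep specification for how `location` patterns are matched before relying on `"/"` to cover every page.

```python
import html
import json
from typing import Optional

# Placeholder values: reserve TDM rights site-wide and link a policy document.
# The URL is hypothetical; replace it with your own policy file.
TDMREP_ENTRIES = [
    {
        "location": "/",       # assumed to cover the site; check the spec's pattern rules
        "tdm-reservation": 1,  # 1 = rights reserved, 0 = not reserved
        "tdm-policy": "https://example.com/tdm-policy.json",
    }
]


def tdmrep_json(entries: list) -> str:
    """Serialise the rules for /.well-known/tdmrep.json."""
    return json.dumps(entries, indent=2)


def tdm_meta_tags(reservation: int, policy_url: Optional[str] = None) -> str:
    """Build the matching <meta> tags for a page's <head>."""
    tags = [f'<meta name="tdm-reservation" content="{reservation}">']
    if policy_url:
        # Escape the URL so it is safe inside an HTML attribute.
        tags.append(f'<meta name="tdm-policy" content="{html.escape(policy_url)}">')
    return "\n".join(tags)


if __name__ == "__main__":
    print(tdmrep_json(TDMREP_ENTRIES))
    print(tdm_meta_tags(1, "https://example.com/tdm-policy.json"))
```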

Our agency’s view

We believe Joomla site owners need to take a deliberate stance on AI crawlers. Allowing everything may dilute your brand and expose sensitive data; blocking everything might make you invisible in future generative search results.

We’re convinced that GEO will gradually eclipse SEO. As users turn to AI assistants for answers, your content could be summarised without a click. Whether this is bad or good for your business depends entirely on your business model. Choosing which bots can access your site is now part of your marketing and legal strategy.

There’s no shame in saying “no” to indiscriminate scraping, but don’t shut the door on valuable AI search bots that could drive traffic.

Key takeaways

- AI bots fall into three categories: data scrapers, search/assistants and agents.

- Blocking LLM scrapers protects exclusive content and server resources, but limits exposure.

- Allow search and assistant bots for direct citations and low server load.

- Use robots.txt, IP blocks, TDMRep and infrastructure providers like Cloudflare to control access.

- Adapt your strategy to the emerging Generative Engine Optimisation era.